PostgreSQL.null_text_to_empty_string

- PostgreSQL.null_text_to_empty_string(table_name, column_names=None, schema_name=None)[source]

Convert null values (in text columns) to empty strings.

- Parameters:

table_name (str) – name of a table

column_names (str | list | None) – (list of) column name(s); when

column_names=None(default), all available columns are includedschema_name (str) – name of a schema

Examples:

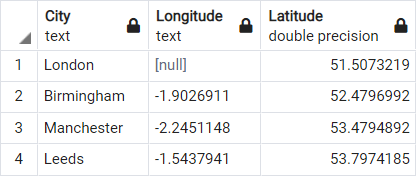

>>> from pyhelpers.dbms import PostgreSQL >>> from pyhelpers._cache import example_dataframe >>> testdb = PostgreSQL('localhost', 5432, 'postgres', database_name='testdb') Password (postgres@localhost:5432): *** Creating a database: "testdb" ... Done. Connecting postgres:***@localhost:5432/testdb ... Successfully. >>> dat = example_dataframe() >>> dat['Longitude'] = dat['Longitude'].astype(str) >>> dat.loc['London', 'Longitude'] = None >>> dat Longitude Latitude City London None 51.507322 Birmingham -1.9026911 52.479699 Manchester -2.2451148 53.479489 Leeds -1.5437941 53.797418 >>> tbl_name = 'test_table' >>> testdb.import_data(data=dat, table_name=tbl_name, index=True, verbose=True) To import data into "public"."test_table" at postgres:***@localhost:5432/postgres ? [No]|Yes: yes >>> testdb.table_exists(table_name=tbl_name) True >>> testdb.get_column_dtype(table_name=tbl_name) {'City': 'text', 'Longitude': 'text', 'Latitude': 'double precision'} >>> dat_ = testdb.read_table(tbl_name) >>> dat_.loc[0, 'Longitude'] is None True

Fig. 15 The table “test_table” in the database “testdb”.

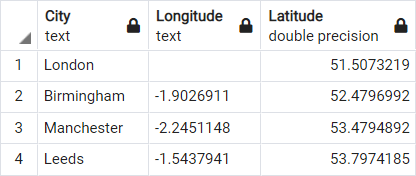

>>> # Replace the 'null' value with an empty string >>> testdb.null_text_to_empty_string(table_name=tbl_name) >>> dat_ = testdb.read_table(tbl_name) >>> dat_.loc[0, 'Longitude'] ''

Fig. 16 The table “test_table” in the database “testdb” (after converting ‘null’ to empty string).

>>> # Delete the database "testdb" >>> testdb.drop_database(verbose=True) To drop the database "testdb" from postgres:***@localhost:5432 ? [No]|Yes: yes Dropping "testdb" ... Done.